[translated from the Russian version by Andrey V. Polyakov]

Recently, a new round of conversations about self-moving carriages and other potential achievements of artificial intelligence has again posed one of the classic questions of humanity: who will be marginalized by the monorail track of the next technological revolution? Some studies argue that artificial intelligence in the near future would lead to a surge of unemployment comparable to the Great Depression. Today we will also talk about who can be replaced by computers in the near future, and who can, without fear and even with some degree of self-satisfaction, expect the arrival of our silicon overlords.

Luddites: senseless English riot or reasonable economic behavior?

Some people believe labor-saving technological change is bad for the workers because it throws them out of work. This is the Luddite fallacy, one of the silliest ideas to ever come along in the long tradition of silly ideas in economics.William Easterly. The Elusive Quest for Growth: Economists’ Adventures and Misadventures in the Tropics

Such a text could hardly do without recalling the most famous opponents of technological progress: the Luddites. Moods against technological progress were strong among English textile workers as far back as the 18th century, but Nottingham manufacturers did not receive letters on behalf of Ned Ludd until 1811. The trigger for the transition to active actions was the introduction of stocking machines, which made the skills of skilled weavers unnecessary: now stockings could be sewn of separate parts without special skills. The resulting product was, by the way, much worse, with stockings quickly bursting at the seams, but they were so much cheaper and they still enjoyed immense popularity. Fearing to lose work, the weavers began attacking factories and smashing newfangled machines.

Luddites were well organized. They understood that conspiracy was vital for them; they brought terrible oaths of loyalty, acted under the cover of night and were rarely apprehended. By the way, most probably General Ludd has never existed: it was a folklore “bigger than life” figure like Paul Banyan. The fight against Luddites was taken seriously: in 1812, “machine breaking” was proclaimed a capital crime, and there were more British soldiers suppressing uprisings than there were fighting Napoleon in the same years! Yet, the Luddites managed to significantly reduce automation in textile production, the prices for products increased, and the goals of the movement were partially achieved. But honestly, when did you last wear any hand-woven stockings, socks or pantyhose?…

We have placed a quote from the book of economist William Easterly as epigraph to this section. Easterly explains that the Luddite movement has spawned (no longer among English weavers, but in quite intellectual circles) the erroneous idea, which he calls the Luddite fallacy, and which still, from time to time, occurs to quite real economists. The idea is that the development of automation is supposed to inevitably lead to a reduction in employment, because fewer people are needed to maintain the same level of production. However, both theory and practice show that quite another outcome is no less likely: simply the same, or even a larger, number of people would produce more goods! And the progress is usually on the side of workers, increasing their productivity and, consequently, their income. Yes, the last consequence is not always that obvious, but there is no apparent way to increase the welfare of each individual worker.

Nevertheless, these arguments do not refute the Luddites themselves. Regardless of the rhetoric, the Luddites were afraid not of a bright future, in which every stockinger could make a hundred pairs of stockings a day, but of immediate tomorrow, when they were on the street and no one would need the only skill they possessed. Moreover, even Easterly does not deny that progress can lead to unemployment and decline of prosperity for certain workers, even if the average well-being of the people grows. Let us take a closer look at this argument.

Whom did the robots replace recently

…we set up this room with girls in it. Each one had a Marchant: one was the multiplier, another was the adder. This one cubed — all she did was cube a number on an index card and send it to the next girl… The speed at which we were able to do it was a hell of a lot faster… We got speed… that was the predicted speed for the IBM machine. The only difference is that the IBM machines didn’t get tired and could work three shifts. But the girls got tired after a while.Richard Feynman. Surely You’re Joking, Mr. Feynman!

An economist from Harvard James Bessen conducted an interesting study. He took a list of major 270 occupations used in the 1950 U.S. Census, and then checked how many had been completely automated so far. It turned out, that out of 270 occupations:

- 232 are active so far;

- 32 were eliminated due to decline of demand and changes in market structure (Bessen refers to, e.g., boardinghouse keepers, however, how would you call people occupied in Airbnb…);

- five became obsolete due to technological advances, but were never automated (for example, a telegraph operator);

- and only one occupation was really fully automated.

Those, willing to think, will have time until the next paragraph.

The only fully automated occupation in Bessen’s research is elevator operator. At this point, our 80+ readers who grew up in the U.S. can certainly sigh and complain that earlier warm colored boys closed behind you double elevator doors, which could not close themselves, and pressed warm lamp buttons… but was it so good for you, and for the colored boys themselves? Maybe they should go to school after all?…

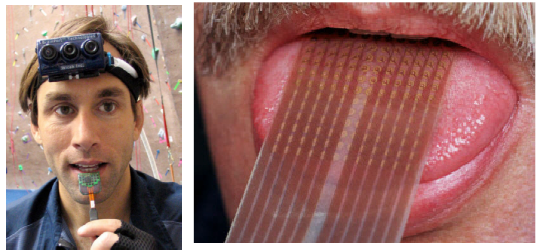

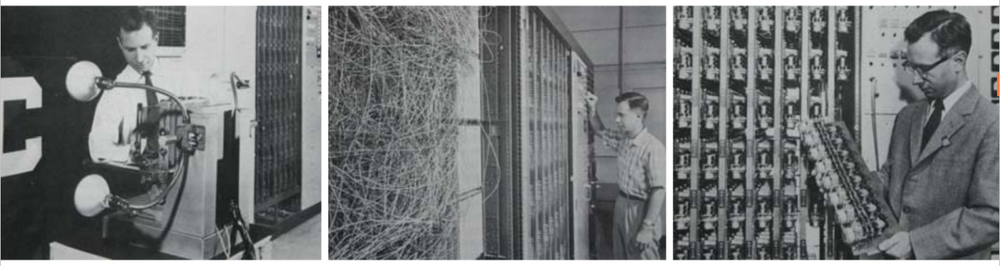

We can add another interesting career path to Bessen’s research. For quite a long time computers have completely replaced the occupation of… computer. Oddly enough, not everyone knows about the existence of this occupation, although it seems obvious that before the advent of computers it was necessary to calculate manually. Many great mathematicians were also outstanding computists: for example, Leonhard Euler could carry out complex calculations in his mind and often amused himself with some complicated exercise when his wife managed to get him to the theater. But how were the calculations made, for example, in the Manhattan project, where no physicist could have managed them on his own, “on paper”?

Well, they really did them manually. The main idea is described in the epigraph: when people perform operations sequentially, as if on a conveyor, it turns out much faster. Of course, humans are prone to error, and the results of the calculations had to be rechecked, but for this, special algorithms can also be developed. In the 19th century, human computers compiled mathematical tables (for example, sinuses or logarithms), and in the twentieth century they worked for military needs, including the Manhattan project. It is computists, by the way, who are responsible for the fact that the occupation of programmer was at first considered female: the computists were mostly girls (for purely sexist reasons — it was believed that women are better suited for monotonous tasks), and the first programmers were often recruited from them.

In his book When Computers Were Human, David Alan Grier describes the atmosphere of the work of living calculators, citing Dickens (another unexpected affinity to real Luddites): “A stern room, with a deadly statistical clock in it, which measured every second with a beat like a rap upon a coffin lid.” Should we be nostalgic for this patriarchal, but by no means bucolic picture?

Bessen distinguishes between complete and partial automation. Indeed, a hereditary elevator operator could have a hard time in a brave new world (there is only one problem: hereditary elevator operators apparently did not have enough time to grow). However, if only part of what you are doing is automated, the demand for your occupation can even increase. And again one can return to the Luddites: during the 19th century, 98% of the labor required to weave a yard of cloth was automated. Theoretically, it was possible to dismiss forty-nine weavers out of fifty and produce the same amount of cloth. However, the final effect was opposite: the demand for cheap cloth grew dramatically, resulting in demand for weavers, and, therefore, the number of jobs increased significantly. And in the 1990’s, the widespread deployment of ATMs did not reduced, but even increased the demand for bank tellers: ATMs allowed banks to operate branch offices at lower cost; this prompted them to open many more branches.

Despite numerous technological innovations, complete occupation automation in the last 50–100 years did not have a noticeable effect in the society. Do you know anyone whose grandfather was an elevator operator and grandmother a computer, who have lost jobs and sunk to the bottom of society because of the goddamned automation?

But partial automation over these years has radically changed the content of work for the vast majority of us. Computers and especially the Internet have made many professions many times more productive — can you imagine how long it would take me to collect data for this article in the 1950’s? And globalization and automation of economy tangibly raised the standard of living — for all, not just the elite. It would be trivial to say that we now live better than medieval kings did — but try to compare our standard of living with the way our grandparents lived. I will not give any examples or statistics, every reader has their own experience thereof: just recall your life before such trifles as mobile phones, Google, microwave ovens, dishwashers (and even washing machines were recently not in all households)…

Therefore, it seems that progress and automation have so far only helped people, and the Luddites’ fears were greatly exaggerated. But, maybe, “the fifth industrial revolution” will be absolutely different? In the second part of the article we will try to dream on this topic.

“The threat of automation”

How far has gone “progrès”? Laboring is in recess,

And mechanical, for sure, will the mental one replace.

Troubles are forgotten, no need for quest,

Toiling are the robots, humans take a rest.

Yuri Entin. From the movie Adventures of Electronic

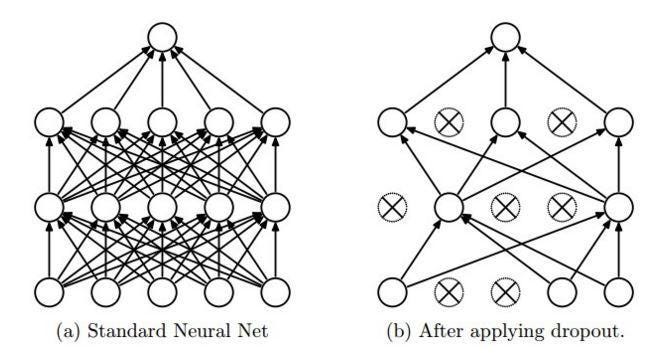

Elevator operators, computists and weavers perfectly symbolize those activities that have been automated so far: technical work, where the output result is expected to maximally match certain parameters, and creativity is not only difficult, but forbidden and, in fact, harmful. Of course, an elevator operator could smile to his passengers, or the computer girl could, having scorned potential damage to her reputation, get acquainted with Richard Feynman himself. But their function was to accurately perform clear, algorithmically defined actions.

I will allow myself a little emotion: these are exactly the kinds of activities that must be automated further! There is nothing human in following a fixed algorithm. Monotonous work with a predetermined input and output is always an extreme measure, the forced oppression of the human spirit in order to achieve certain practical goal. And if the goal can be achieved without wasting human time, that is the way to proceed.

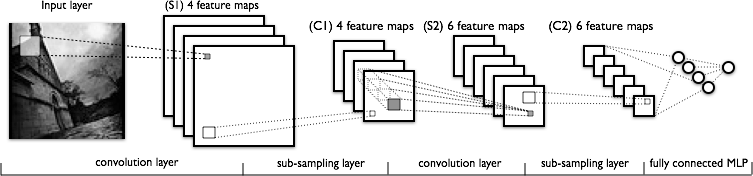

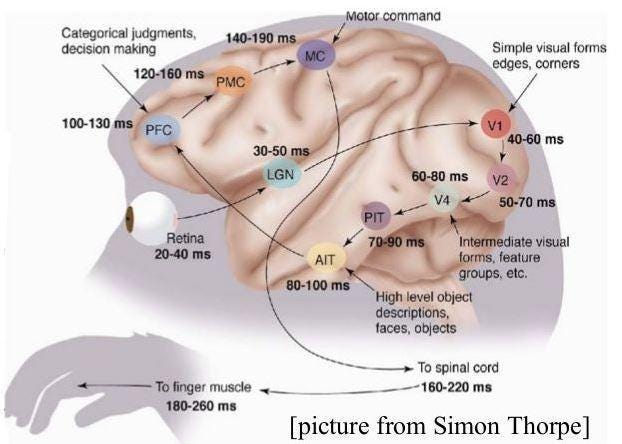

However, now it comes to the fact that computers start gradually replacing people in areas that were so far considered as human domain. For example, since 2014, Facebook is able to recognize faces on human performance level, and computer vision technologies are only improving.

Sergey Nikolenko

Chief Research Officer, Neuromation